GPT AI Data Security

Data security is a prerequisite for getting value from GPT applications. Enterprises need end-to-end protection across the input, processing, and output stages of the workflow, using measures such as data isolation, access control, and audit trails.

GPT Data Security Risks

Employee use of GPT-based tools creates a significant risk of data leakage. According to a report, approximately 25% of employees may upload sensitive data, such as company business plans, customer information, and product designs, into such AI tools.

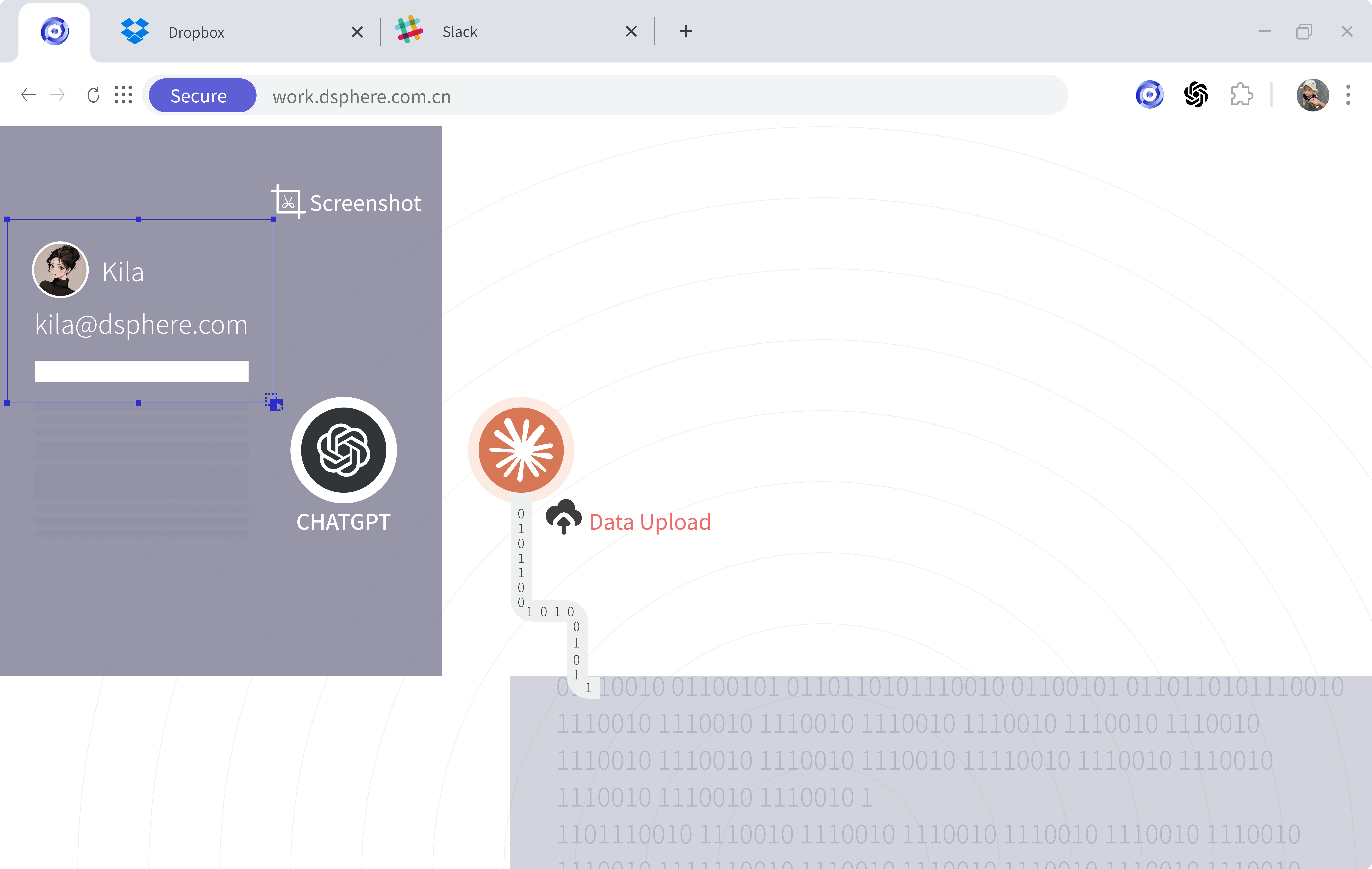

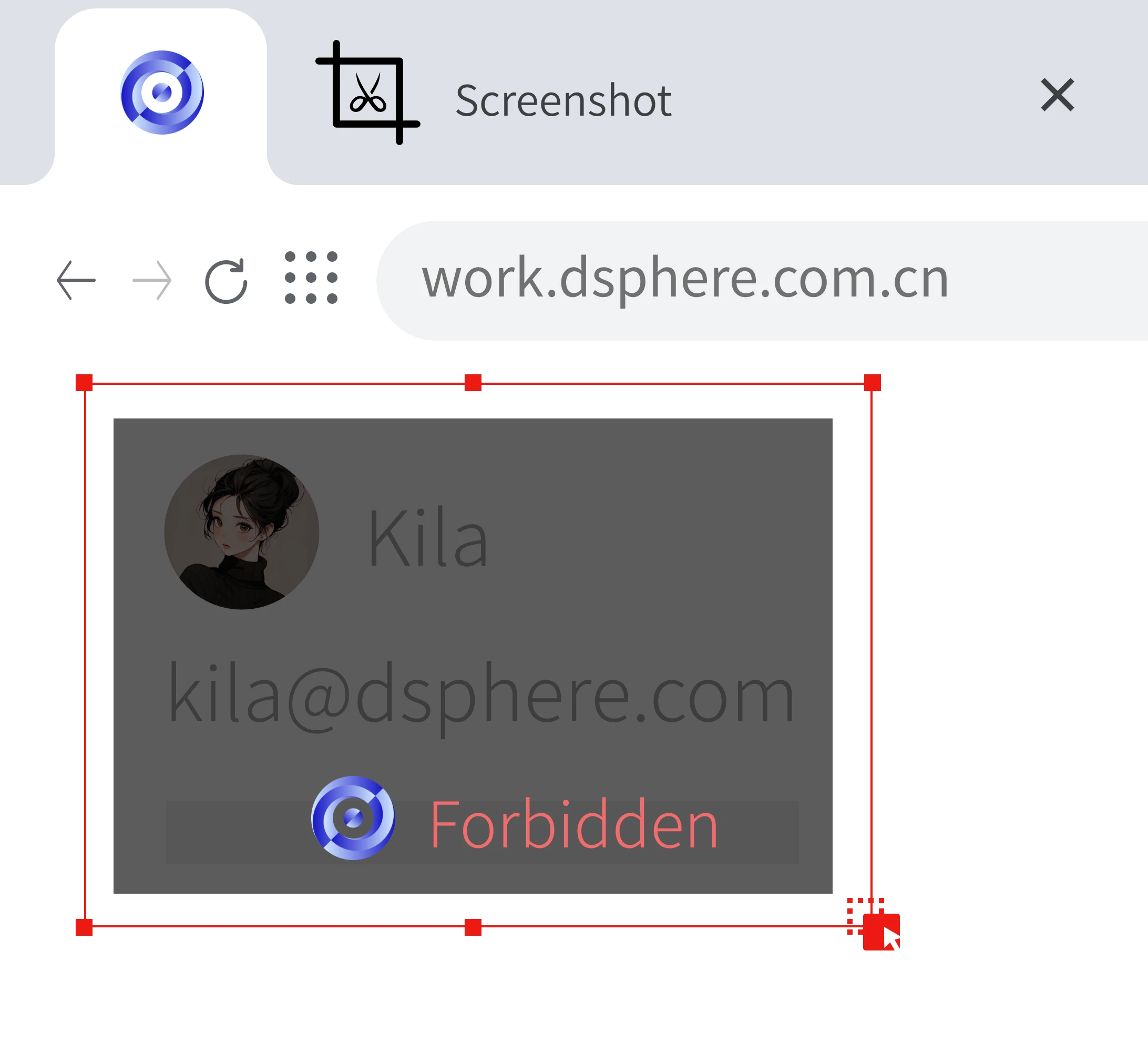

GPT Screenshot

Prohibit GPT tools from taking automatic screenshots of sensitive business systems or their pages.
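As a rough illustration of how such a rule might be evaluated, the sketch below checks a page URL against a list of sensitive hosts before a screenshot is permitted. The host patterns and the function name `screenshot_allowed` are hypothetical and do not come from any particular product; a real deployment would load its patterns from a managed policy.

```python
from fnmatch import fnmatch
from urllib.parse import urlsplit

# Hypothetical host patterns for sensitive business systems (assumption).
SENSITIVE_HOST_PATTERNS = ["crm.example.com", "*.finance.example.com"]


def screenshot_allowed(page_url: str, patterns=SENSITIVE_HOST_PATTERNS) -> bool:
    """Return True only if the page is not on a sensitive host."""
    host = (urlsplit(page_url).hostname or "").lower()
    if not host:
        # Fail closed: if the host cannot be determined, deny the screenshot.
        return False
    return not any(fnmatch(host, pattern) for pattern in patterns)
```

Denying the request when the URL cannot be parsed is a deliberate choice here: an unknown page is treated as sensitive rather than safe.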

Browser API Control

Enforce fine-grained control over browser APIs to prevent GPT browser plugins from freely invoking interfaces and accessing web application page data.

Browser Extension

Independently manage all data permissions for browser plugins, such as screen capture, data reading, data upload, and webpage modification, and appropriately define the permission scope for GPT plugins.
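One way to picture a per-plugin permission scope is a default-deny policy table with an audit trail, sketched below. The class names, the extension ID `gpt-helper`, and the four permission labels are illustrative assumptions modeled on the permission types listed above, not the API of any specific product.

```python
from dataclasses import dataclass, field
from enum import Enum


class Permission(Enum):
    SCREEN_CAPTURE = "screen_capture"
    DATA_READ = "data_read"
    DATA_UPLOAD = "data_upload"
    PAGE_MODIFY = "page_modify"


@dataclass
class ExtensionPolicy:
    extension_id: str
    granted: frozenset = frozenset()  # nothing is granted by default


@dataclass
class PolicyEngine:
    policies: dict = field(default_factory=dict)
    audit_log: list = field(default_factory=list)

    def register(self, policy: ExtensionPolicy) -> None:
        self.policies[policy.extension_id] = policy

    def check(self, extension_id: str, permission: Permission) -> bool:
        """Default deny: unknown extensions and ungranted permissions are refused."""
        policy = self.policies.get(extension_id)
        allowed = policy is not None and permission in policy.granted
        # Record every decision so that access can be audited later.
        self.audit_log.append((extension_id, permission.value, allowed))
        return allowed


# Hypothetical usage: a GPT plugin may read page data but not upload it.
engine = PolicyEngine()
engine.register(ExtensionPolicy("gpt-helper", frozenset({Permission.DATA_READ})))
can_read = engine.check("gpt-helper", Permission.DATA_READ)
can_upload = engine.check("gpt-helper", Permission.DATA_UPLOAD)
```

Each permission is granted independently, so a plugin can be allowed to read a page without also being allowed to capture the screen, upload data, or modify the page.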

Time to Rebuild Data Security

If you are still relying on the terminal document control model of decades past, and if data security is still being enforced at the cost of employee privacy, then it's time for a change!